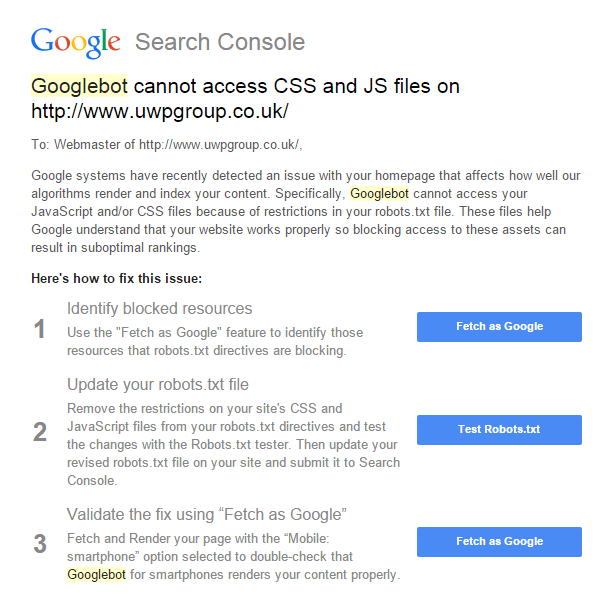

A number of our clients have received a message from the Google Search Console warning that Googlebot cannot access CSS and JS files on their site.

And they’re not alone.

According to Barry Schwartz of Search Engine Land, “many, many” sites have been affected.

Judging from the comments on Schwartz’s post, a lot of people are struggling to get their heads around the issue, and those with WordPress sites appear to be the most affected.

If Googlebot cannot access the CSS and JS files on your website, we’ve got the lowdown on what the issue is and what you need to do.

Why are crawlable CSS and JS files important?

Check out the updated Fetch and Render tool in the Search Console to see why they’re important to your site.

When you submit a URL from your site, the tool shows how the page looks to Googlebot alongside how it looks to a regular visitor.

If any resources on your site are blocked, the difference makes it clear why that is a problem: without its CSS and JavaScript, the page Googlebot sees can look very different from the one your visitors see.
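The Fetch and Render tool does the actual rendering, but if you just want a quick list of the stylesheet and script files a page pulls in (the files Googlebot needs to be allowed to fetch), a short script can extract them from the HTML. This is a minimal sketch using only Python’s standard library. It assumes you already have the page’s HTML as a string, it only looks at `<link rel="stylesheet">` and `<script src>` tags (not CSS `@import` rules or files loaded by JavaScript), and `ResourceCollector`, `collect_resources` and the example.com URLs are hypothetical names of our own.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ResourceCollector(HTMLParser):
    """Collects stylesheet and script URLs referenced by an HTML page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.css = []
        self.js = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # valueless attributes map to None
        if tag == "link":
            rel = (attrs.get("rel") or "").lower().split()
            if "stylesheet" in rel and attrs.get("href"):
                # Resolve relative hrefs against the page URL
                self.css.append(urljoin(self.base_url, attrs["href"]))
        elif tag == "script" and attrs.get("src"):
            # Inline <script> blocks have no src and are skipped
            self.js.append(urljoin(self.base_url, attrs["src"]))


def collect_resources(html, base_url):
    """Return (css_urls, js_urls) referenced by html, resolved against base_url."""
    collector = ResourceCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.css, collector.js


if __name__ == "__main__":
    page = """<html><head>
<link rel="stylesheet" href="/wp-content/themes/example/style.css?ver=4.2">
<script src="/wp-includes/js/jquery/jquery.js"></script>
<script>console.log("inline");</script>
</head><body></body></html>"""
    css, js = collect_resources(page, "https://www.example.com/")
    print("CSS:", css)
    print("JS:", js)
```

Each URL it prints is a file whose path you can check against the rules in your robots.txt.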

Additionally, if your CSS is blocked, Google may not be able to tell that your site is mobile-friendly, which is crucial these days.

So, if Googlebot cannot access the CSS and JS files on your site, you need to get it sorted.

Did we know this was coming?

It’s important for SEO companies like us to keep on top of Google algorithm changes, of which there are now several hundred a year.

Google updated its webmaster guidelines last autumn and warned webmasters that blocking CSS and JavaScript could result in “suboptimal rankings”.

The world’s No.1 search engine is essentially reinforcing that message with notifications via email and the Search Console.

One reason for this is Google’s clampdown on low-quality sites.

For example, Google runs a page layout algorithm that assesses how many ads your pages show.

In particular, it checks how many ads appear above the fold. If there are too many, that can hurt your rankings.

In the past, blocking your CSS was touted as an “easy” way of avoiding this scrutiny, since without it Google could not see how the page was laid out.

Again, we are seeing a tightening of the Google algorithm as it penalises sites for spammy content.

What’s changed?

As we mentioned, Google has frowned on sites that stop Googlebot accessing their CSS and JS files for some time now.

However, its focus on the issue seems to have stepped up a notch.

We expect this to affect more sites, so it’s definitely something to address sooner rather than later.

The guys at Yoast have linked Google’s notification to the “Mobilegeddon” update and suggest that it is still rolling out.

So it’s best to keep your ear to the ground, as we are, and monitor developments.

How to fix it

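If you have a WordPress site, you can simply use the Yoast SEO plugin to edit your robots.txt file. Easy peasy.

For those without Yoast, add the following to your robots.txt file to allow Googlebot to crawl the CSS and JavaScript files on your site:

User-agent: Googlebot

Allow: /*.js

Allow: /*.css

The leading /* matters: rules are matched against the URL path from its start, and the wildcard lets each rule match .js and .css files in any folder. Bear in mind that Googlebot follows only the most specific user-agent group that matches it, so a Googlebot group replaces, rather than adds to, any rules under User-agent: *. If you copy Disallow lines into it, note that Google applies the longest matching rule, so a short Allow: /*.js will not override a longer Disallow such as Disallow: /wp-includes/. In that case, remove or narrow the Disallow instead.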

Google doesn’t index .css and .js files in its search results, so unblocking them carries no SEO downside.

Indeed, it’s clearer than ever that not taking this action could negatively affect your rankings.

You can then use the “Fetch as Google” tool in the Search Console to confirm that the new robots.txt file has successfully unblocked the files.
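Before re-fetching in the Search Console, you can sanity-check a set of rules locally. The Python sketch below approximates Google’s documented matching for a single user-agent group: `*` matches any run of characters, a trailing `$` anchors the end of the URL, the longest matching rule wins and Allow wins a tie. It is a simplified illustration (no robots.txt file parsing, group selection or percent-encoding normalisation), and `rule_to_regex` and `is_allowed` are hypothetical helpers of our own, not a Google API.

```python
import re


def rule_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters and a trailing '$' anchors the
    end of the URL; everything else is matched literally.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(url_path, rules):
    """Return True if url_path may be crawled under one user-agent group.

    rules is a list of ("allow" | "disallow", pattern) pairs. The longest
    matching pattern wins, Allow wins a tie, and no match means allowed.
    """
    best = None  # (pattern length, is_allow) of the strongest match so far
    for kind, pattern in rules:
        if not pattern:
            continue  # an empty rule value blocks nothing
        # re.match anchors at the start: rules match from the start of the path
        if rule_to_regex(pattern).match(url_path):
            candidate = (len(pattern), kind == "allow")
            if best is None or candidate > best:
                best = candidate
    return True if best is None else best[1]


if __name__ == "__main__":
    path = "/wp-includes/js/jquery/jquery.js"
    groups = {
        "bare .js": [("disallow", "/wp-includes/"), ("allow", ".js")],
        "short /*.js": [("disallow", "/wp-includes/"), ("allow", "/*.js")],
        "longer allow": [("disallow", "/wp-includes/"), ("allow", "/wp-includes/*.js")],
    }
    for name, rules in groups.items():
        print(name, is_allowed(path, rules))
```

In this sketch, only the last group lets the jQuery file through: a bare .js never matches a path that begins with /, and /*.js loses to the longer Disallow: /wp-includes/. Leaving `$` off the Allow rules also keeps versioned files such as style.css?ver=4.2 covered.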

Summary – Googlebot cannot access CSS and JS files

Google’s recent notification is a sign that the search giant is moving further away from crawling only the text of websites and towards seeing pages as visitors do.

Expect to see further similar updates in the future.

For now, make sure you’ve allowed Googlebot access to your CSS and JS files.

If you need any help with this, do let us know. We’d be happy to help.

Should there be any further developments, we’ll let you know.